Wisconsin algorithm Part 2

I have two comics to draw, but on Friday I was asked a question about Wisconsin’s “Sum to 1” algorithm (S1). I tried not to think about it, but then I had an idea while taking a shower. I didn’t have the time to check it out, but I couldn’t get it out of my mind. Yesterday, I spent about 12 hours on it (and 6 on one of the comics).

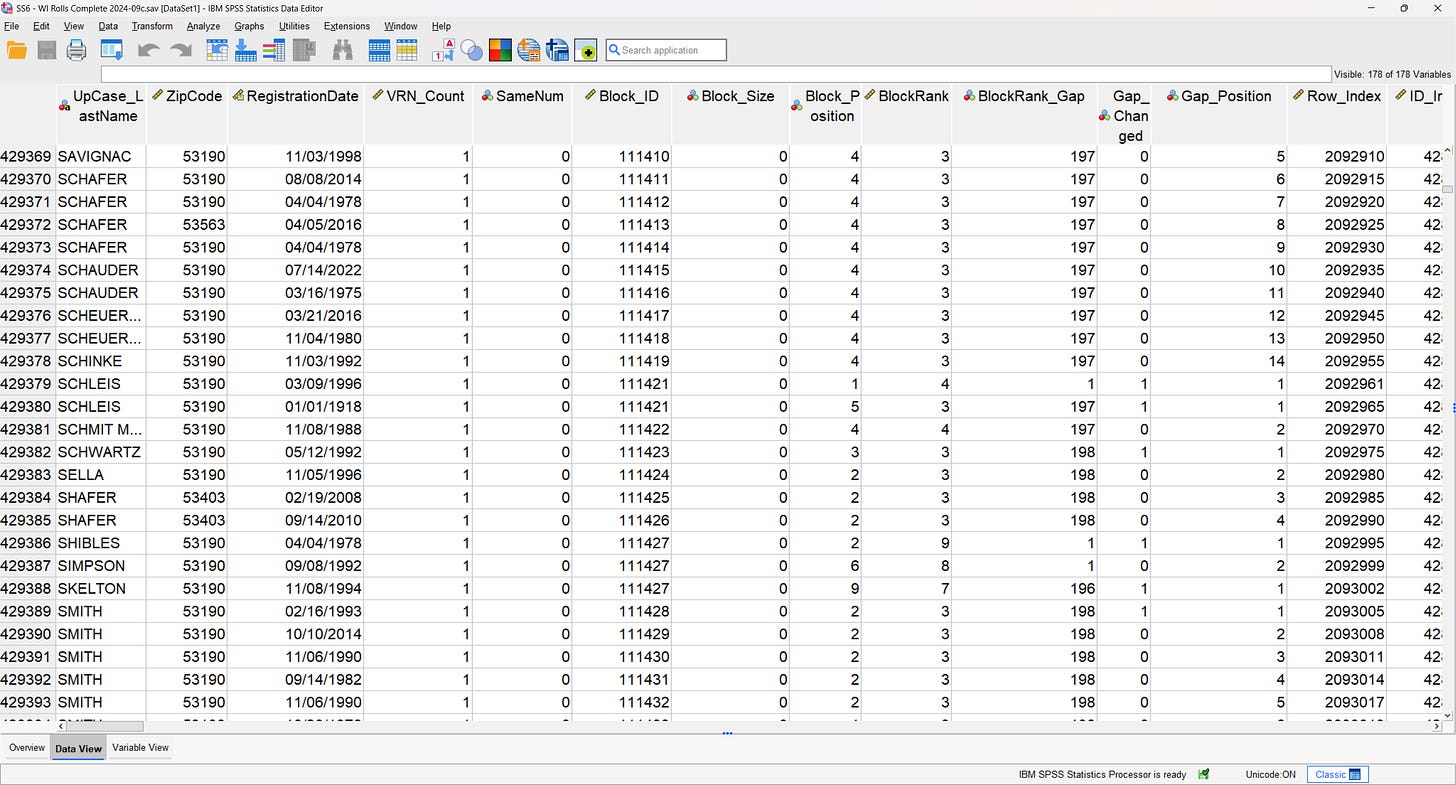

The S1 algorithm is the …